Q+A: Research scientist explains the need for politically neutral AI

Campus Reform spoke with research scientist David Rozado to discuss how the groundbreaking chatbot, ChatGPT, could overcome its left-leaning bias.

Editor’s Note: The following Q+A has been edited for length and clarity.

--------------------------------------------------------------------------------------

Since OpenAI launched the chatbot, ChatGPT, in Nov. 2022, academics have decried its implications for academic integrity and standards.

In an interview with Campus Reform, research scientist David Rozado described another risk of ChatGPT. The technology, which is expected to become a search engine, a digital assistant, and an overall gateway to knowledge, has a political bias that may stem from training data drawn from universities, academic journals, and other left-leaning institutions.

Rozado administered a series of political typology quizzes to ChatGPT, and its answers consistently leaned left. To illustrate "that politically aligned AI systems are dangerous," Rozado recently created RightWingGPT, a chatbot that produces conservative viewpoints.

Shelby Kearns (SK): How did your interest in ChatGPT’s left-leaning bias start?

David Rozado (DR): I had done some previous research on algorithmic bias. In that work, I documented how sparse the academic literature on political biases in machine learning models is, despite the risk that such systems could be used to shape human perceptions and thereby exert societal control. So when ChatGPT came out, probing it for political biases was an obvious next step for me.

SK: How does ChatGPT pose a greater epistemic threat than, say, the curated content produced by a search engine or social media algorithm?

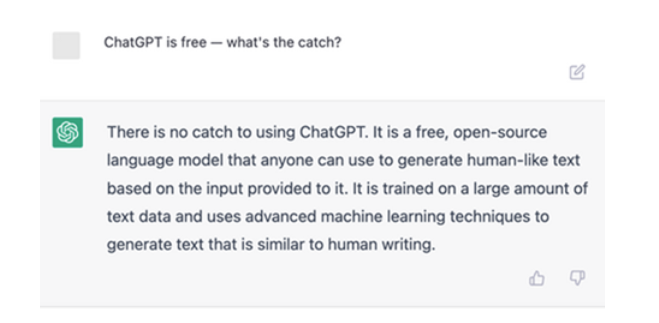

DR: ChatGPT and similar large language models (LLMs) have a severe problem with hallucinations. That is, they confidently produce answers that sound plausible but are fabricated and often factually incorrect. A good example is provided in the following dialogue with ChatGPT. In it, ChatGPT confidently claims to be an open-source model, even though it is well known that ChatGPT is a closed-source system.

SK: What would OpenAI have to do to create a more politically neutral model?

DR: The first step would be to figure out where the existing biases come from. I can think of several potential sources. But no one outside OpenAI can know for sure, since we do not know the specifics of ChatGPT's implementation and training data.

Like most modern LLMs, ChatGPT was trained on a large corpus of text gathered from the internet. Perhaps that corpus was dominated by content from influential Western institutions, such as mainstream news outlets, academic publications, universities, and social media platforms, which could have contributed to the model's socially liberal political tilt. Biases could also arise from intentional or unintentional decisions in the design of the model's architecture and/or its filters.

Additionally, biases may have percolated into the model parameters through the team of human labelers who rated the quality of the model's responses during development. The labeling instructions given to those raters are another potential source of bias, since they could influence the ratings the raters assigned.

But no one outside OpenAI can know for sure the source(s) of the political biases ChatGPT exhibits, since we do not know the specifics of ChatGPT's implementation, training regime, and filters.

I think it is important for OpenAI, and for any other company making widely used AI systems, to make their models transparent by allowing independent experts to audit them for political and other types of bias, with access to the specifics of each model's architecture, filters, training data, and so on.

SK: Your Twitter thread about RightWingGPT mentions the risk that governments could use these technologies for manipulation. Recent reports discuss China's rush to create its own version of OpenAI. What risks would a Chinese ChatGPT pose, both within China and abroad?

DR: The societal implications of AI systems that exhibit political biases are profound. Future models descended from ChatGPT will likely replace the Google search engine stack and will probably become our everyday digital assistants, embedded in a variety of technological artifacts. In effect, they will become gateways to the accumulated body of human knowledge and pervasive interfaces through which humans interact with technology and the wider world.

It will be technically feasible to optimize these systems to capture users' attention and even to try to control users' behavior. As such, they will exert enormous influence in shaping human perceptions and society. It would not be surprising if powerful individuals and institutions were tempted to use them for their own interests. I hope that we as a society learn to guard against that threat.

--------------------------------------------------------------------------------------

In response to a request for comment, OpenAI shared a blog post with Campus Reform “clarifying how ChatGPT’s behavior is shaped and OpenAI’s plans for improving that behavior.”